Azure Data Factory (ADF) is the ETL (Extract Transform Load) component of the Microsoft Azure environment. It is used to create pipelines to extract, modify and move data in and out of numerous data silos. In the case in point, it can be used to transfer data from or to an on-premises Oracle database.

However, to be able to use resources hosted on a private network, a Self-Hosted Integration Runtime (SHIR) needs to be installed first. This handles the connection to on-premises systems, such as an Oracle database. Readers are referred to the Microsoft documentation on installing and configuring a SHIR on a machine that has access to your network: here.

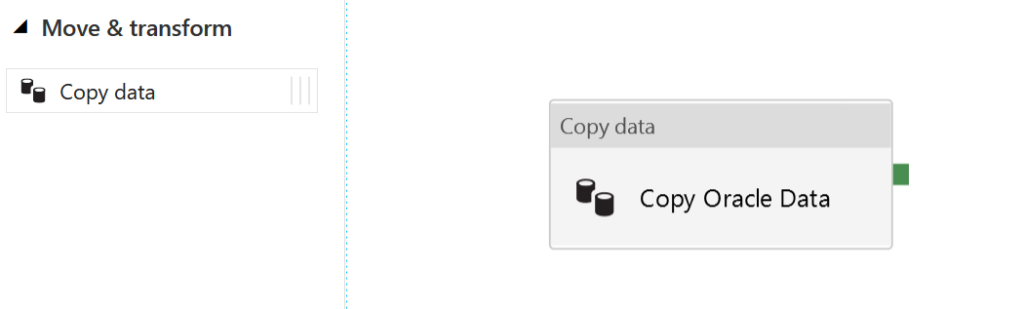

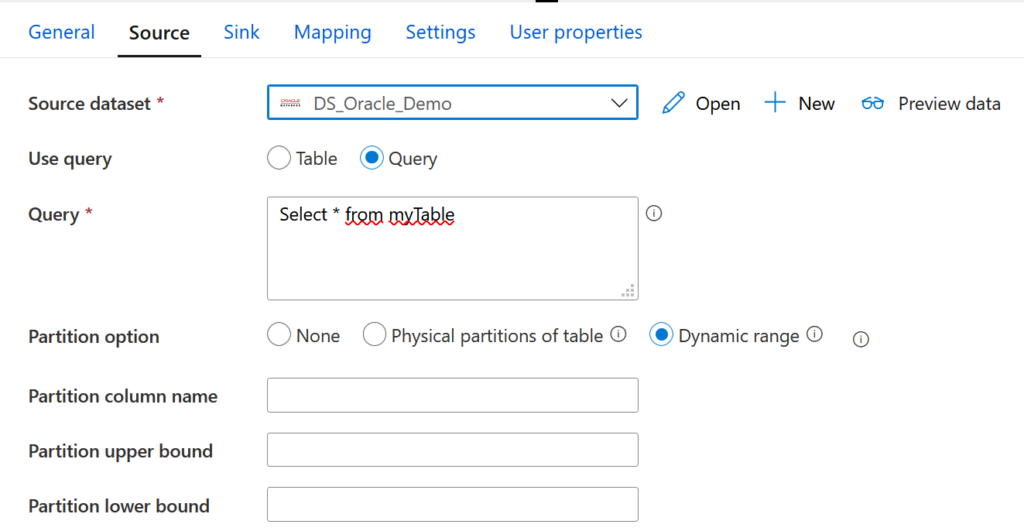

Once the SHIR is configured, you can connect to Oracle as to any other data source on ADF. For most data traffic, a simple copy activity will be sufficient. For our example, we are going to create a data flow from one Oracle database to another. To do so, after adding a new pipeline, select the “Copy data” activity.

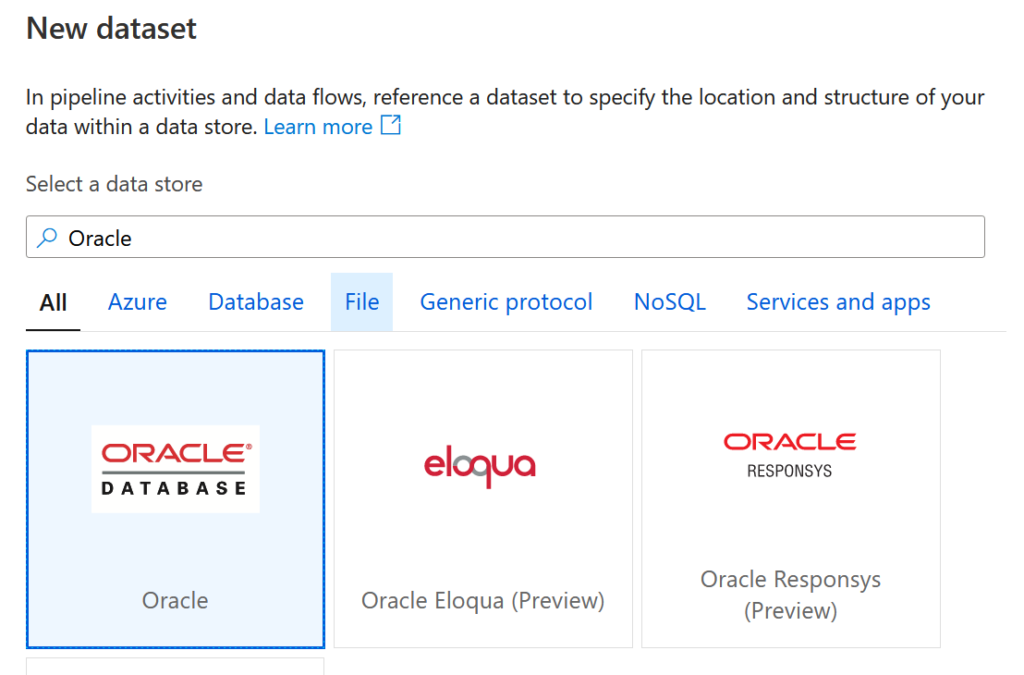

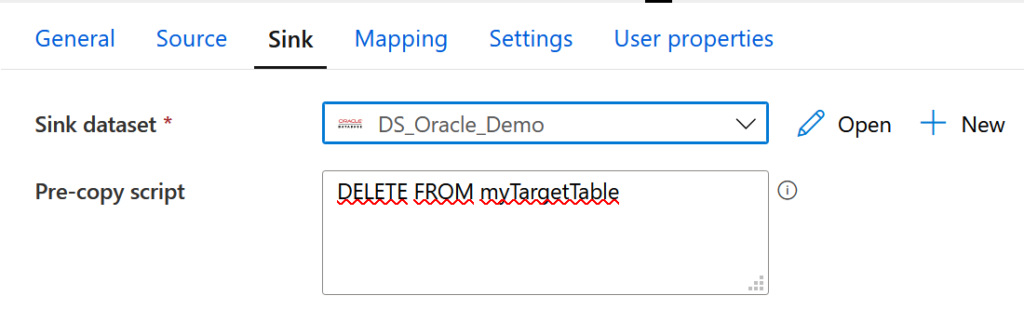

All that remains is to configure the “source” and “sink” databases. In both cases, a new dataset must be created in the corresponding tab, and Oracle selected.

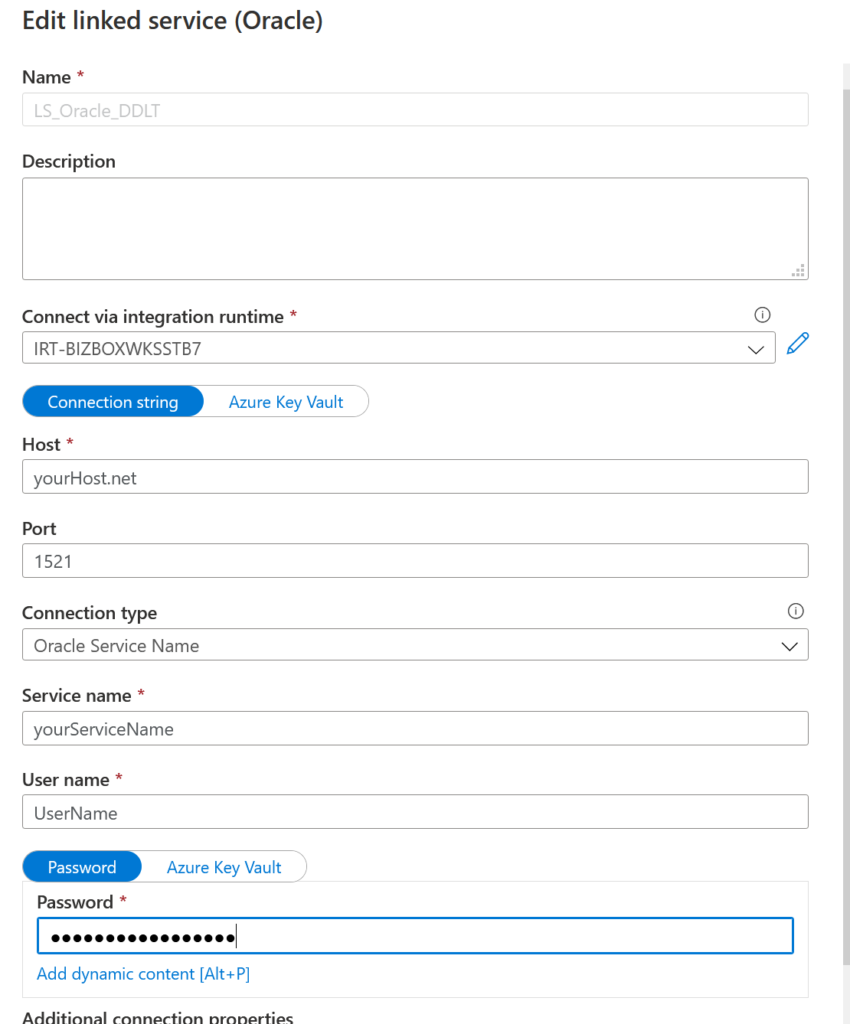

Once the dataset is initialized, it needs to be configured using a linked service that will permit us to access data through the SHIR. To do this, open the new dataset and select “New linked service”. A window opens, where the connection information to query the Oracle database has to be entered.

We previously showed a simple case where just one copy activity was used for the transfer. However, for more complex data flows, when simple SQL queries are inadequate, it may be necessary to call a procedure to manage part of the process.

ADF’s “Stored procedure” activity is mainly limited to SQL and does not allow direct interaction with an Oracle procedure. An external Azure service will therefore be used to do so. We have two basic options, either a Logic App or an Azure Function. Both work, but the second option is preferable because Oracle drivers need to be installed for the first option.

Like SHIR, our Azure Function will need a proxy to access on-premises resources. This will be a Hybrid Connection Manager. If you do not yet have one, the Azure documentation on installing it can be found here.

Once all that has been configured, all that remains is to write the Azure Function. The NuGet package called “Oracle.ManagedDataAccess.Core” will be used to interact with Oracle. An HTTP trigger will be used to call our Azure Function from ADF. The code is below; it simply accesses the database and runs a procedure that is already written.

using System.IO;

using System.Threading.Tasks;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.AspNetCore.Http;

using Microsoft.Extensions.Logging;

using Oracle.ManagedDataAccess.Client;

namespace fa_ScoreCards_CBR

{

public static class Call_Proc_Oracle_ScoreCards

{

private static readonly string CONNECTION_STRING = "yourConnectionString";

[FunctionName("Call_Proc_Oracle_ScoreCards")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "get", Route = null)] HttpRequest req,

ILogger log)

{

var orclCon = new OracleConnection(CONNECTION_STRING);

try

{

orclCon.Open();

// Creation of the Query

OracleCommand orclCmd = orclCon.CreateCommand();

orclCmd.CommandType = System.Data.CommandType.StoredProcedure;

orclCmd.CommandText = "yourStoredProcedureName";

orclCmd.ExecuteNonQuery();

orclCmd.Dispose();

orclCon.Close();

} catch (System.Exception Ex)

{

log.LogError(Ex.Message);

throw (new System.Exception(Ex.Message));

} finally

{

orclCon.Close();

}

return new OkResult();

}

}

}

Once this code has been adapted and the Azure Function deployed, its URL is retrieved. A web activity can then be called before or after the copy activity.