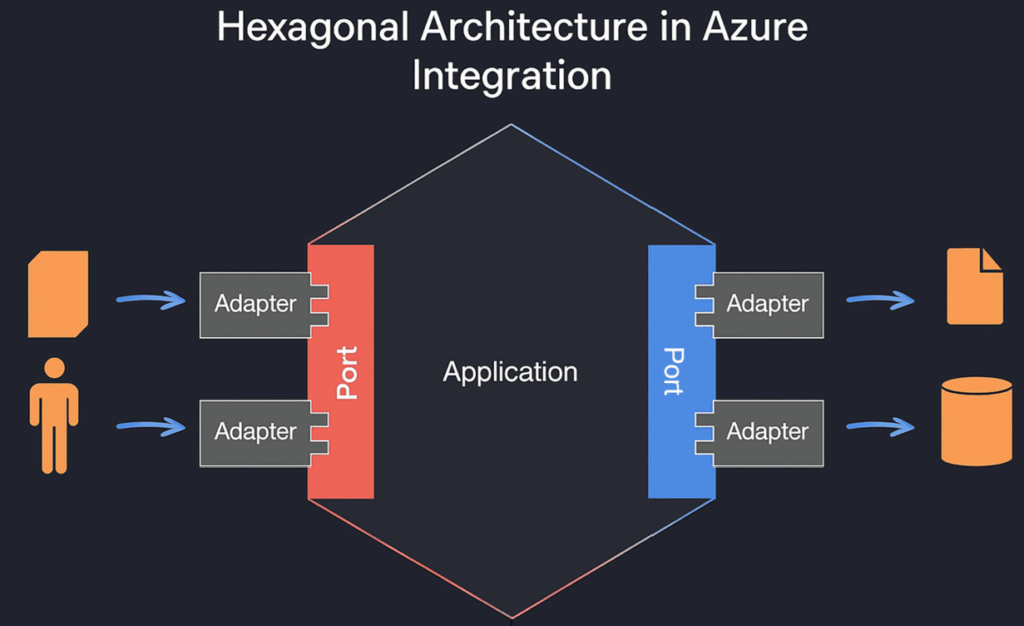

Hexagonal architecture, also known as “Ports and Adapters”, is a software design style that promotes a strict separation between business logic (at the core of the application) and interactions with the outside world (systems, databases, APIs, etc.). This paradigm is widely appreciated for developing modular and testable applications. But can it be effectively applied to data integration in an Azure environment?

That’s a legitimate question, as data integration has historically been dominated by ETL or ELT pipelines, often orchestrated using low-code/no-code tools or targeted scripts. However, the growing complexity of business needs, the emergence of event-driven architectures, and maintainability requirements are pushing us to rethink our approach.

Let’s explore three key dimensions to assess the relevance of hexagonal architecture in this context.

Hexagonal architecture enforces a clear distinction between the domain (the “core” of the application) and all technical interactions: data reads/writes, API calls, file manipulations, etc. In an Azure context, this means transformation, validation, deduplication, or enrichment logic is isolated within a domain library or module, fully independent from Azure services (Blob Storage, Event Hub, SQL Database, etc.).
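To make the separation concrete, here is a minimal sketch in Python. The names `CustomerRecord`, `RecordSource`, `RecordSink`, `clean_records`, and `run_integration` are hypothetical and chosen for illustration only. The point is that nothing in this module imports an Azure SDK: the ports are plain interfaces, and the domain logic is a pure function.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable, Protocol


@dataclass(frozen=True)
class CustomerRecord:
    customer_id: str
    email: str


class RecordSource(Protocol):
    """Input port: where records come from (Blob Storage, Event Hub, ...)."""

    def read(self) -> Iterable[CustomerRecord]: ...


class RecordSink(Protocol):
    """Output port: where cleaned records go (SQL Database, an API, ...)."""

    def write(self, records: list[CustomerRecord]) -> None: ...


def clean_records(records: Iterable[CustomerRecord]) -> list[CustomerRecord]:
    """Pure domain logic: normalize emails, drop invalid rows, deduplicate by id."""
    seen: set[str] = set()
    cleaned: list[CustomerRecord] = []
    for record in records:
        email = record.email.strip().lower()
        if "@" not in email or record.customer_id in seen:
            continue
        seen.add(record.customer_id)
        cleaned.append(CustomerRecord(record.customer_id, email))
    return cleaned


def run_integration(source: RecordSource, sink: RecordSink) -> int:
    """Application service: wires the ports to the domain, knows nothing about Azure."""
    cleaned = clean_records(source.read())
    sink.write(cleaned)
    return len(cleaned)
```

Any class with a matching `read` or `write` method satisfies the port, so an adapter for a specific Azure service can live in a separate module.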

This separation promotes better functional understanding of the application. It allows developers or analysts to focus on the “what” without being distracted by the “how”.

When infrastructure evolves (e.g., switching from SQL to Cosmos DB, adding a Kafka channel), tightly coupled architectures often require major rewrites. Hexagonal architecture addresses this by confining the parts most sensitive to change to the adapters.

If an adapter changes, only that class needs to be reimplemented or replaced, as long as the interfaces (ports) remain stable. This flexibility becomes a major asset in multi-client or multi-environment integration projects.
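As an illustration of swapping adapters behind a stable port, the sketch below defines one hypothetical `OrderSink` port and two interchangeable implementations. To stay runnable without cloud resources, `sqlite3` stands in for a relational store and a plain dictionary of JSON strings stands in for a document store; real adapters for Azure SQL Database or Cosmos DB would use their respective SDKs instead.

```python
from __future__ import annotations

import json
import sqlite3
from typing import Protocol


class OrderSink(Protocol):
    """Output port: the only thing the domain knows about persistence."""

    def save(self, order_id: str, amount: float) -> None: ...


class SqlOrderSink:
    """Relational adapter; sqlite3 is a stand-in for a SQL database here."""

    def __init__(self, connection: sqlite3.Connection) -> None:
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS orders (order_id TEXT PRIMARY KEY, amount REAL)"
        )

    def save(self, order_id: str, amount: float) -> None:
        self._conn.execute(
            "INSERT OR REPLACE INTO orders (order_id, amount) VALUES (?, ?)",
            (order_id, amount),
        )


class DocumentOrderSink:
    """Document adapter; a dict of JSON strings is a stand-in for a document store."""

    def __init__(self) -> None:
        self.documents: dict[str, str] = {}

    def save(self, order_id: str, amount: float) -> None:
        self.documents[order_id] = json.dumps({"id": order_id, "amount": amount})


def record_order(sink: OrderSink, order_id: str, amount: float) -> None:
    """Domain-side code: validates the amount, then depends only on the port."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    sink.save(order_id, amount)
```

Switching stores then means passing a different sink to `record_order`; the function itself does not change.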

Each module has a clear responsibility: business logic defines what must be done, and adapters handle the technical implementation. This structure improves code readability, offers natural documentation of responsibilities, and makes code reviews more efficient.

It also eases collaboration in cross-functional teams: functional experts can focus on business logic without fear of breaking technical components.

By centralizing business logic in a pure domain (with no Azure or external dependencies), the code becomes highly testable. Unit tests can be written quickly with clear assertions on domain input/output.
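A unit test for a pure domain rule could look like the following sketch. The `Shipment` type, the `REGION_BY_COUNTRY` table, and the `enrich_region` rule are invented for the example; what matters is that the tests need nothing beyond the standard library.

```python
from __future__ import annotations

import unittest
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Shipment:
    shipment_id: str
    country_code: str
    region: Optional[str] = None


# Hypothetical reference data used by the enrichment rule.
REGION_BY_COUNTRY = {"FR": "EMEA", "DE": "EMEA", "US": "AMER"}


def enrich_region(shipment: Shipment) -> Shipment:
    """Domain rule: derive the region from the country code; unknown codes raise ValueError."""
    code = shipment.country_code.strip().upper()
    if code not in REGION_BY_COUNTRY:
        raise ValueError(f"unknown country code: {shipment.country_code!r}")
    return replace(shipment, country_code=code, region=REGION_BY_COUNTRY[code])


class EnrichRegionTests(unittest.TestCase):
    def test_known_country_is_enriched(self) -> None:
        result = enrich_region(Shipment("s-1", " fr "))
        self.assertEqual(result, Shipment("s-1", "FR", "EMEA"))

    def test_unknown_country_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            enrich_region(Shipment("s-2", "ZZ"))
```

Saved as a module, these tests can be run with `python -m unittest`, with no storage account, connection string, or emulator involved.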

For integration testing, each adapter can be tested independently: it is enough to verify that it fulfills its port contract, without running the entire chain. This keeps failures local to one component and makes them easier to diagnose.

Instead of relying on actual Azure resources to run tests, you can inject test doubles (mocks, stubs, or fakes) in place of the real adapters. For example:
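A minimal sketch of such fakes, assuming a hypothetical `import_readings` use case with one input port and one output port (all names here are illustrative, not taken from a real project):

```python
from __future__ import annotations

from typing import Iterable, Protocol


class ReadingSource(Protocol):
    def fetch(self) -> Iterable[dict]: ...


class ReadingSink(Protocol):
    def store(self, readings: list[dict]) -> None: ...


def import_readings(source: ReadingSource, sink: ReadingSink) -> int:
    """Domain use case: keep readings that have a numeric 'value', skip the rest."""
    valid = [r for r in source.fetch() if isinstance(r.get("value"), (int, float))]
    if valid:
        sink.store(valid)
    return len(valid)


class FakeSource:
    """Fake input adapter returning canned data instead of reading real storage."""

    def __init__(self, readings: list[dict]) -> None:
        self._readings = readings

    def fetch(self) -> Iterable[dict]:
        return list(self._readings)


class FakeSink:
    """Fake output adapter that records what the domain asked it to store."""

    def __init__(self) -> None:
        self.stored: list[list[dict]] = []

    def store(self, readings: list[dict]) -> None:
        self.stored.append(readings)


# Edge case: a batch mixing a valid value, a malformed value, and a missing value.
sink = FakeSink()
count = import_readings(FakeSource([{"value": 21.5}, {"value": "n/a"}, {}]), sink)
assert count == 1 and sink.stored == [[{"value": 21.5}]]

# Edge case: an empty batch must not trigger a write at all.
empty_sink = FakeSink()
assert import_readings(FakeSource([]), empty_sink) == 0 and empty_sink.stored == []
```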

This control allows testing domain behavior in edge cases, at low cost and without a complex environment.

Imagine a new business need arises: deliver the same data to a partner API in addition to a SQL database. In a traditional architecture, this often leads to duplicated logic. In hexagonal, you simply add an output adapter that consumes existing ports.
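One possible way to do this is a small composite adapter that fans out to several targets, sketched below. `DeliveryPort`, `SqlDelivery`, `PartnerApiDelivery`, `FanOutDelivery`, and `publish_invoice` are hypothetical names, and both concrete adapters are stand-ins that only collect what they would send, so the example runs without a database or network.

```python
from __future__ import annotations

import json
from typing import Protocol


class DeliveryPort(Protocol):
    """Output port the domain already depends on."""

    def deliver(self, payload: dict) -> None: ...


class SqlDelivery:
    """Existing adapter (stand-in): collects rows that would be inserted into SQL."""

    def __init__(self) -> None:
        self.rows: list[dict] = []

    def deliver(self, payload: dict) -> None:
        self.rows.append(payload)


class PartnerApiDelivery:
    """New adapter (stand-in): collects JSON bodies that would be sent to the partner."""

    def __init__(self) -> None:
        self.requests: list[str] = []

    def deliver(self, payload: dict) -> None:
        self.requests.append(json.dumps(payload, sort_keys=True))


class FanOutDelivery:
    """Composite adapter: forwards each payload to every configured target."""

    def __init__(self, *targets: DeliveryPort) -> None:
        self._targets = targets

    def deliver(self, payload: dict) -> None:
        for target in self._targets:
            target.deliver(payload)


def publish_invoice(port: DeliveryPort, invoice_id: str, total: float) -> None:
    """Unchanged domain use case: builds the payload once and hands it to the port."""
    port.deliver({"invoice_id": invoice_id, "total": total})
```

Calling `publish_invoice(FanOutDelivery(SqlDelivery(), PartnerApiDelivery()), "inv-1", 99.0)` delivers to both targets without touching `publish_invoice` itself.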

This flexibility enables incremental system growth while keeping technical debt in check.

Azure Data Factory, Logic Apps, and Synapse are powerful tools to orchestrate ETL or automation flows. But they tend to embed business logic directly in their definitions (UI or JSON), which goes against the clean separation principle of hexagonal architecture.

That said, they can be used in a disciplined way: by limiting their role to a “port” or orchestrator, they become entry or exit points that delegate business logic to external components.

For example, a Logic App can act as an input port (receiving a webhook or a Service Bus message) and then call an Azure Function or a container holding the business logic. Data Factory offers less room for this, but the same principle can apply.

In short: they can call adapters or orchestrate hexagonal components, but should never contain the domain. Use them as surface integration tools, not as a business engine.

Azure Functions and related hosting options (Durable Functions, or WebJobs on App Service) are well suited to applying hexagonal principles. You can:

This keeps the code clean, testable, and modular while leveraging Azure’s serverless capabilities.
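As a rough illustration, the sketch below shows a thin entry-point handler that only parses the request, delegates to a pure domain function, and maps errors to status codes. It is deliberately written without any Azure Functions bindings: `handle_request`, `price_quote`, and `QuoteSink` are hypothetical names, and in a real function app the trigger code would simply call `handle_request` with the request body.

```python
from __future__ import annotations

import json
from typing import Protocol


class QuoteSink(Protocol):
    """Output port for persisting a computed quote."""

    def save(self, quote: dict) -> None: ...


def price_quote(quantity: int, unit_price: float) -> dict:
    """Pure domain rule (illustrative): 10% discount from 100 units upward."""
    if quantity <= 0 or unit_price < 0:
        raise ValueError("quantity must be positive and unit_price non-negative")
    discount = 0.10 if quantity >= 100 else 0.0
    total = round(quantity * unit_price * (1 - discount), 2)
    return {"quantity": quantity, "total": total}


def handle_request(body: bytes, sink: QuoteSink) -> tuple[int, str]:
    """Entry-point adapter: parse, delegate to the domain, map errors to status codes."""
    try:
        data = json.loads(body)
        quote = price_quote(int(data["quantity"]), float(data["unit_price"]))
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, missing fields, and rejected values all become a 400.
        return 400, json.dumps({"error": str(exc)})
    sink.save(quote)
    return 200, json.dumps(quote)
```

Because the handler receives its sink as a parameter, the same code can be exercised in a test with a fake sink and deployed with a real adapter.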

Azure encourages decoupled architectures with services like Event Grid, Service Bus, or Storage Queues. These work very well with hexagonal adapters:

In this way, infrastructure becomes not a constraint, but a facilitator, elegantly integrated into clean design.
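A message-driven input adapter can follow the same shape. In the sketch below, Python's in-process `queue.Queue` is a stand-in for a real broker such as Service Bus or a Storage Queue, and `QueueInputAdapter` and `to_order_event` are hypothetical names. The adapter decodes messages, calls the domain, hands the result to a publish callback, and sets aside messages it cannot process.

```python
from __future__ import annotations

import json
import queue
from typing import Callable


def to_order_event(message: dict) -> dict:
    """Domain rule (illustrative): turn a raw order message into a normalized event."""
    return {"type": "OrderAccepted", "order_id": str(message["order_id"]).upper()}


class QueueInputAdapter:
    """Input adapter: pulls raw messages, decodes them, and calls the domain handler."""

    def __init__(
        self,
        inbox: "queue.Queue[str]",
        handler: Callable[[dict], dict],
        publish: Callable[[dict], None],
    ) -> None:
        self._inbox = inbox
        self._handler = handler
        self._publish = publish
        self.dead_letters: list[str] = []

    def drain(self) -> int:
        """Process every message currently in the inbox; return how many succeeded."""
        handled = 0
        while True:
            try:
                raw = self._inbox.get_nowait()
            except queue.Empty:
                return handled
            try:
                event = self._handler(json.loads(raw))
            except (ValueError, KeyError, TypeError):
                # Keep unprocessable messages for inspection instead of losing them.
                self.dead_letters.append(raw)
                continue
            self._publish(event)
            handled += 1
```

For example, with `outbox: list[dict] = []`, an adapter built as `QueueInputAdapter(inbox, to_order_event, outbox.append)` leaves the domain function unaware of where messages come from or where events go.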

Using a hexagonal architecture for data integration in Azure is not only possible, but recommended, provided that:

For simple ETL orchestration cases, this may be unnecessary complexity. But in distributed, data-centric, hybrid, or multi-cloud environments, this architecture delivers the robustness, flexibility, and sustainability expected from a solid integration solution.